![Exchange-2010-Logo-733341[1]](https://eightwone.files.wordpress.com/2009/11/exchange-2010-logo-7333411.png?w=150&h=71) Part 2: Datacenter Activation Coordination Mode


Part 3: DAC and Exchange 2010 SP1

On the list for potential writing subjects is Datacenter Activation Coordination mode found in Exchange 2010. But to understand Datacenter Activation Coordination, you need to know a thing or two about the Active Manager. So consider this a multi-part article, where I’ll first elaborate on the Active Manager.

Roles

The Active Manager is the director of High Availability for Exchange 2010 and runs on every mailbox server. An Active Manager running on non-DAG mailbox servers is called a Standalone Active Manager. When running in Standalone mode, the Active Manager checks Active Directory every 30 seconds for any topology changes. When it detects that the server has been added to a DAG, the Active Manager will switch to DAG mode.

Active Managers running on mailbox servers within a Database Availability Group (DAG) run in DAG mode and hold one of two roles: Standby Active Manager (SAM) or Primary Active Manager (PAM). All mailbox servers within a DAG have the SAM role except for one, which has the PAM role. Which mailbox server runs PAM is determined by the quorum: PAM always follows quorum ownership, so if the server holding it fails, the server that obtains the quorum seizes the PAM role. Changes in Active Manager mode or role are logged in the event log:

- Active Manager changed from ‘[PAM|SAM|Standalone]’ to ‘[PAM|SAM|Standalone]’ (EventID 111);

- Active manager configuration change detected. (PreviousRole=’..’, CurrentRole=’..’, …) (EventID 227);

- Active manager on this server is running as [PAM|SAM|Standalone] for duration xx:xx:xx.xx (AuthoritativeServer=…) (EventID 325).

You can also check which mailbox server currently holds the PAM role by running the Get-DatabaseAvailabilityGroup cmdlet from the Exchange Management Shell with the Status parameter. The value to look for is the PrimaryActiveManager property, which contains the mailbox server currently holding the PAM role.

All Active Managers, PAM and SAMs alike, are responsible for monitoring the health of the databases mounted on the local server, from both an information store and an Extensible Storage Engine (ESE) perspective. The actual monitoring is performed by the Exchange Replication Service, which reports failures to the local Active Manager. When a failure is detected, the local Active Manager notifies the PAM, which initiates a fail-over. When the server that holds the PAM role and the quorum fails, the quorum moves to another server and that server seizes the PAM role. Because PAM stores DAG state information in the cluster database (located on the quorum), the PAM role can move without consequences.
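The role rules described above can be summarised in a few lines of Python. This is only an illustrative model, not how Exchange implements it; the function name, the server names and the idea of passing the quorum owner in as a plain string are all assumptions made for the sketch.

```python
def active_manager_role(server, dag_members, quorum_owner):
    """Return the Active Manager role a mailbox server would hold.

    Hypothetical model: dag_members is the set of servers in the DAG and
    quorum_owner is the member that currently owns the cluster quorum.
    """
    if server not in dag_members:
        return "Standalone"          # not part of any DAG
    if server == quorum_owner:
        return "PAM"                 # PAM always follows quorum ownership
    return "SAM"                     # every other DAG member


dag = {"MBX1", "MBX2", "MBX3"}

# MBX1 owns the quorum, so it is the PAM.
before = {s: active_manager_role(s, dag, "MBX1") for s in sorted(dag)}

# MBX1 fails and MBX2 obtains the quorum: the PAM role moves with it.
after = {s: active_manager_role(s, dag, "MBX2") for s in sorted(dag)}

print(before)
print(after)
```

Note that the role is derived purely from quorum ownership, which is why a quorum move is all it takes for another server to become PAM.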

Activation Preference and Blocking

All this tracking of active and passive databases and coordinating database activation during switch-overs or fail-overs happens automatically. However, administrators can have some influence on the selection process. First they can configure the preferred activation order by using the Exchange Management Console.

The Activation Preference can also be configured from the Exchange Management Shell, using the Set-MailboxDatabaseCopy cmdlet with the ActivationPreference parameter:

Set-MailboxDatabaseCopy -Identity DB1\MBX1 -ActivationPreference 2

Another option that administrators have is to block activation at the server level using the Set-MailboxServer cmdlet in conjunction with the DatabaseCopyAutoActivationPolicy parameter:

Set-MailboxServer -Identity MBX3 -DatabaseCopyAutoActivationPolicy Blocked

The DatabaseCopyAutoActivationPolicy parameter can take 3 possible values:

- Blocked

Prevent automatic database activation on selected mailbox server;

- IntrasiteOnly

Only allow automatic activation within the same Active Directory site to prevent cross-site failover;

- Unrestricted

No restrictions.
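To make the effect of the three policy values concrete, here is a small Python sketch of how a candidate server could be filtered. The function name and the way Active Directory sites are passed in as strings are assumptions for illustration; Exchange evaluates this internally.

```python
def allowed_for_auto_activation(policy, candidate_site, active_copy_site):
    """Decide whether a server may automatically activate a database copy.

    policy is the server's DatabaseCopyAutoActivationPolicy value;
    candidate_site / active_copy_site are hypothetical AD site names of
    the candidate server and of the server that hosted the active copy.
    """
    if policy == "Blocked":
        return False                               # never activate automatically
    if policy == "IntrasiteOnly":
        return candidate_site == active_copy_site  # no cross-site failover
    if policy == "Unrestricted":
        return True
    raise ValueError(f"unknown policy: {policy}")


print(allowed_for_auto_activation("IntrasiteOnly", "Amsterdam", "Amsterdam"))
print(allowed_for_auto_activation("IntrasiteOnly", "London", "Amsterdam"))
```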

After being notified of a failure, or after detecting one itself, PAM follows these steps to recover:

1. Run the Best Copy Selection (BCS) process;

2. Run the Attempt Copy Last Logs (ACLL) process;

3. Issue a mount request to the appropriate MSExchangeIS process;

4. If the mount is successful, make the database available to clients. Note that the Exchange Replication service will recover any lost messages from the Transport Dumpster for the activated database;

5. If the mount fails, repeat steps 2-4 for the next best copy.
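The recovery sequence above is essentially a retry loop over the candidates that Best Copy Selection produced. A minimal Python sketch, assuming ACLL and the mount request can be represented as simple callables that return True or False (both are stand-ins, not Exchange APIs):

```python
def recover_database(ranked_copies, attempt_copy_last_logs, mount):
    """Walk the BCS-ordered candidates until one of them mounts.

    Returns (server, attempts): the server that mounted the database
    (or None if none did) plus a trace of what happened per candidate.
    """
    attempts = []
    for server in ranked_copies:                 # order produced by BCS
        if not attempt_copy_last_logs(server):   # ACLL refused this copy
            attempts.append((server, "ACLL failed"))
            continue
        if mount(server):                        # mount request succeeded
            attempts.append((server, "mounted"))
            return server, attempts
        attempts.append((server, "mount failed"))  # fall through to next copy
    return None, attempts


# Hypothetical stubs: ACLL only succeeds on MBX2, every mount request succeeds.
winner, trace = recover_database(
    ["MBX3", "MBX2"],
    attempt_copy_last_logs=lambda server: server == "MBX2",
    mount=lambda server: True,
)
print(winner, trace)
```

If the loop runs out of candidates it returns None, which corresponds to the situation where the administrator has to step in.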

I’ll discuss these steps in the next paragraphs. Be advised that besides conducting the DAG, PAM is also responsible for providing information to other Exchange components, like the RPC Client Access service and Hub Transport Server for directing their client-side components to the appropriate mailbox server hosting the active copy of a database.

Best Copy Selection

The Best Copy Selection process starts by creating a list of available copies, ignoring unreachable or blocked entries. It then sorts the list by potential data loss, which is based on the Copy Queue Length; when multiple copies have an equal copy queue length, the Activation Preference is used as a secondary sort key. Finally, it uses a set of 10 criteria to determine which database copy to activate.

This is the ordered list of criteria used by the BCS to determine the best copy. The conditions in both Copy Queue Length and Replay Queue Length are related to the number of log files:

| Criteria Nr. | Copy Queue Length | Replay Queue Length | Content Index Status | Database Status |
| --- | --- | --- | --- | --- |
| 1 | <10 | <50 | Healthy | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 2 | <10 | <50 | Crawling | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 3 | | <50 | Healthy | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 4 | | <50 | Crawling | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 5 | | <50 | | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 6 | <10 | | Healthy | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 7 | <10 | | Crawling | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 8 | | | Healthy | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 9 | | | Crawling | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |
| 10 | | | | Healthy, DisconnectedAndHealthy, DisconnectedAndResynchronizing or SeedingSource |

Note that there are two special situations which might occur after PAM has determined the best copy:

- When multiple copies turn out to be eligible, the activation order, configured through the Activation Preference setting, will be decisive. For example, when copy A with an Activation Preference of 1 and copy B with an Activation Preference of 4 both end up ex aequo, copy A will become active because of its lower Activation Preference setting;

- When no database copy is found to be eligible for activation, the administrator has to fix the issue by bringing one of the database copies to a state where it satisfies the BCS criteria, or by activating a database and accepting potentially significant data loss.
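The criteria table and the tie-breaking rule lend themselves to a short Python model. The sketch below is my own reading of the table, not Exchange code: an empty cell is treated as "not checked", and copies that match the same criterion are ordered by copy queue length and then Activation Preference. The class, field names and sample servers are hypothetical.

```python
from dataclasses import dataclass

OK_STATUS = {"Healthy", "DisconnectedAndHealthy",
             "DisconnectedAndResynchronizing", "SeedingSource"}

# (copy queue limit, replay queue limit, content index state); None = not checked
CRITERIA = [
    (10, 50, "Healthy"), (10, 50, "Crawling"),
    (None, 50, "Healthy"), (None, 50, "Crawling"), (None, 50, None),
    (10, None, "Healthy"), (10, None, "Crawling"),
    (None, None, "Healthy"), (None, None, "Crawling"), (None, None, None),
]


@dataclass
class Copy:
    server: str
    activation_preference: int
    copy_queue: int
    replay_queue: int
    content_index: str
    status: str = "Healthy"


def matched_criterion(copy):
    """Return the number (1-10) of the first criterion the copy satisfies."""
    if copy.status not in OK_STATUS:
        return None                      # fails every row of the table
    for number, (cq, rq, ci) in enumerate(CRITERIA, start=1):
        if cq is not None and copy.copy_queue >= cq:
            continue
        if rq is not None and copy.replay_queue >= rq:
            continue
        if ci is not None and copy.content_index != ci:
            continue
        return number
    return None


def rank(copies):
    """Order eligible copies: best criterion first, then queue length, then preference."""
    scored = [(matched_criterion(c), c) for c in copies]
    eligible = [(n, c) for n, c in scored if n is not None]
    eligible.sort(key=lambda p: (p[0], p[1].copy_queue, p[1].activation_preference))
    return [(c.server, n) for n, c in eligible]


copies = [
    Copy("EX-B", 4, 3, 10, "Healthy"),
    Copy("EX-A", 1, 3, 10, "Healthy"),
    Copy("EX-C", 3, 20, 10, "Crawling"),
    Copy("EX-D", 2, 0, 0, "Healthy", status="Failed"),
]
print(rank(copies))
```

EX-A and EX-B both satisfy criterion 1 with identical queue lengths, so the lower Activation Preference puts EX-A first; EX-D is not eligible at all because of its database status.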

The result of the Best Copy Selection process is logged as the following event in the event log:

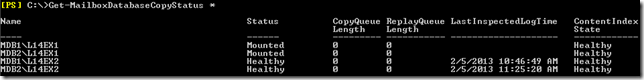

Got Logs?

Next, the Attempt Copy Last Logs (ACLL) process is initiated. This process is performed by the Exchange Replication Service on the server selected to host the active copy. ACLL tries to copy any missing log files from the best available source. To find that source, it queries the other servers for their availability and health status, but also for their LastLogInspected value (a log generation number; LastInspectedLogTime is the corresponding timestamp). The server with the highest LastLogInspected value (i.e. the latest LastInspectedLogTime) is considered the best source for copying log files from.
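Picking the best log source boils down to taking the maximum LastLogInspected among the servers that can actually be used. A hedged Python sketch, in which the dictionary keys and sample values are invented for illustration:

```python
def best_log_source(copies):
    """Return the server with the highest LastLogInspected among usable copies.

    Each entry is a hypothetical dict with 'server', 'reachable', 'healthy'
    and 'last_log_inspected' (a log generation number).
    """
    usable = [c for c in copies if c["reachable"] and c["healthy"]]
    if not usable:
        return None                      # nothing to copy logs from
    return max(usable, key=lambda c: c["last_log_inspected"])["server"]


copies = [
    {"server": "MBX1", "reachable": False, "healthy": True, "last_log_inspected": 1200},
    {"server": "MBX2", "reachable": True, "healthy": True, "last_log_inspected": 1195},
    {"server": "MBX3", "reachable": True, "healthy": True, "last_log_inspected": 1150},
]
print(best_log_source(copies))
```

MBX1 has seen the most recent log generation, but since it is unreachable the next best source, MBX2, is chosen.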

You can also check the timestamp values by opening the Status tab of the database Properties view after selecting the copy you wish to inspect.

AutoDatabaseMountDial

After determining the best source, ACLL tries to replicate the log files from this source. If all missing log files can be replicated, the database is mounted without data loss. If replication is unsuccessful or the set of log files on the source is incomplete, some data is missing. This is where the AutoDatabaseMountDial parameter becomes important. This setting, which is defined at the server level using the Set-MailboxServer cmdlet, defines how many missing logs ACLL finds acceptable before it will mount a database.

The AutoDatabaseMountDial can have the following settings:

- BestAvailability (default)

Mount the database if the copy queue length is 12 or less. Exchange attempts to replicate the remaining logs, after which the database is mounted;

- GoodAvailability

Mount the database if the copy queue length is 6 or less. Exchange attempts to replicate the remaining logs, after which the database is mounted;

- Lossless

Only mount the database if the copy queue length is 0, meaning all logs on the original active copy have been replicated. In that case the database is mounted.

If the number of missing logs exceeds the maximum allowed by the configured setting, the administrator must take action by either recovering the missing logs or forcing a database mount.
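The three dial settings translate into a simple threshold check. The Python sketch below uses the limits listed above; the dictionary and function names are my own.

```python
# Maximum number of missing logs each AutoDatabaseMountDial value tolerates.
MOUNT_DIAL_MAX_MISSING_LOGS = {
    "BestAvailability": 12,
    "GoodAvailability": 6,
    "Lossless": 0,
}


def can_auto_mount(dial, missing_logs):
    """Return True when the number of missing logs is within the dial's limit."""
    return missing_logs <= MOUNT_DIAL_MAX_MISSING_LOGS[dial]


for dial in MOUNT_DIAL_MAX_MISSING_LOGS:
    print(dial, [n for n in (0, 5, 6, 12, 50) if can_auto_mount(dial, n)])
```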

Finally, if ACLL reports success to PAM, PAM will try to mount the database by contacting the MSExchangeIS process on the selected server with the instruction to mount the database as active. An exception to this is when the maximum number of active databases for that server has been reached. This limit can be configured using Set-MailboxServer by specifying a numeric value for MaximumActiveDatabases:

Set-MailboxServer -Identity MBX2 -MaximumActiveDatabases 50

When the maximum number of active databases has been reached, PAM will move on to the next best copy.

Example

All this information calls for an example. In this example we have a DAG with four members, servers MBX1 through MBX4. The DAG hosts one mailbox database, DB1, whose copy on MBX1 is currently active. MBX2 through MBX4 each hold a passive copy; the copy on MBX4 is lagged.

Given that all of the database copies are healthy, the copy on MBX4 is blocked for activation (which is a best practice for lagged copies) and AutoDatabaseMountDial is left at BestAvailability, the current state of the DAG is as follows:

| Database | Activation Preference | Copy Queue Length | Replay Queue Length | Content Index Status |
| --- | --- | --- | --- | --- |
| MBX2\DB1 | 2 | 5 | 50 | Healthy |
| MBX3\DB1 | 3 | 50 | 25 | Crawling |
| MBX4\DB1 | 4 | 25 | 2500 | Healthy |

MBX1 experiences a failure. After detecting the failure, PAM starts the Best Copy Selection process:

- The potential copies are sorted based on their copy queue length. The list PAM will use is {MBX2, MBX3}. MBX4 is skipped since it is blocked for automatic activation. Since there are no mailbox servers with identical copy queue lengths, the Activation Preference isn’t consulted.

- The criteria are checked against the candidate list. MBX2 matches criterion no. 6 (its replay queue length of 50 is not below 50, which rules out criteria 1 through 5) and MBX3 matches criterion no. 4. Since PAM finds a match on MBX3 first (MBX3 matches the higher-ranked criterion, i.e. 4<6), MBX3 is selected for fail-over.

The ACLL process is now asked to try copying the missing log files from the original source to MBX3. Since MBX1 is still down, it cannot, so the number of missing log files remains at 50. Because the AutoDatabaseMountDial is set to BestAvailability, which requires the number of missing log files to be 12 or less, ACLL fails and notifies PAM.

PAM will now try the next best copy. The next best copy is MBX2, which matched criterion 6. Again, ACLL is asked to copy the missing log files to MBX2. MBX1 is still down, so the number of missing log files remains at 5. However, this number satisfies the AutoDatabaseMountDial requirement of 12 or less, so ACLL reports success to PAM. In turn, PAM will activate the database on MBX2.

The story doesn’t end here because the Hub Transport Servers will be asked to resubmit any messages from a certain point in time. But since this article is about Active Manager, I’ll dedicate another article to that some time.

Manual Override

As described above, circumstances might require the administrator to take action to get a database mounted. For example, this can happen with unhealthy databases or with an AutoDatabaseMountDial setting of Lossless. The administrator can fix the issue, with potential data loss, by overriding the Best Copy Selection criteria or the AutoDatabaseMountDial setting.

To activate a database on another server, use the Move-ActiveMailboxDatabase cmdlet with the ActivateOnServer parameter. Used without additional parameters, the cmdlet is still subject to the BCS criteria and AutoDatabaseMountDial settings. To override these, use one or more of the following parameters:

- SkipLagChecks

Overrides the criteria for copy and replay queue lengths;

- MountDialOverride

Overrides the AutoDatabaseMountDial setting. Possible options are GoodAvailability (max. 6 logs missing), BestAvailability (max. 12 logs missing), Lossless (no logs missing), None (use the AutoDatabaseMountDial setting) and BestEffort. BestEffort is only available with manual activation and accepts ANY number of missing logs. Note that by default Lossless will be used when nothing is specified; if you want to use the configured AutoDatabaseMountDial setting, specify MountDialOverride:None;

- SkipClientExperienceChecks

Skip checking the Content Index health state. This means you may activate a mailbox database copy with an incomplete or damaged index, so it might need recrawling;

- SkipHealthChecks

Skip database health checks.

Note that you can also perform manual activation from the Exchange Management Console, but there you can only specify the MountDialOverride parameter.

Divergence

When the mailbox server which held the original active copy comes back online, its copy will be passive because another mailbox server now hosts the active copy. Obviously, the copy will be outdated and needs fixing. This state is called divergence.

When divergence is detected by the Exchange Replication Service, an incremental reseed will take place. To bring the copy up to date, it first will need to determine the divergence point by comparing log files. It will then ask the mailbox server holding the active copy for pages containing data that has changed since the divergence point. These pages are replayed in the database until the passive copy is in sync again; the other mailbox servers will ignore those pages (which are transported using log files). When in sync, the copy will remain passive.
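Finding the divergence point can be pictured as walking two log streams side by side until they stop matching. The following Python sketch is a simplification under my own assumptions: each log stream is a list of per-generation checksums (generation 1 first), which is not how Exchange stores or compares logs internally.

```python
def divergence_point(active_logs, passive_logs):
    """Return the first log generation where the two streams differ, or None.

    Both arguments are hypothetical lists of per-generation checksums,
    with index 0 holding generation 1.
    """
    for generation, (a, p) in enumerate(zip(active_logs, passive_logs), start=1):
        if a != p:
            return generation            # same generation, different content
    if len(active_logs) != len(passive_logs):
        # One stream is a strict prefix of the other.
        return min(len(active_logs), len(passive_logs)) + 1
    return None                          # streams are identical


active = ["a1", "b7", "c9", "d4"]     # logs on the current active copy
passive = ["a1", "b7", "c2"]          # logs on the returning, outdated copy
print(divergence_point(active, passive))
```

Everything from the returned generation onward is what the passive copy has to obtain from the active copy to get back in sync.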

Note that divergence can also be caused when the administrator takes a (passive copy of a) database offline.

Summary

The Active Manager manages database copies, coordinates activation and determines which actions to take when a fail-over is needed. The Active Manager can have one of three roles, depending on whether a DAG is used and whether it is responsible for managing that DAG. Automatic activation of databases after a fail-over is determined by a set of rules; the behaviour can be influenced or overridden by the administrator, who must accept potential consequences like data loss.

You normally don’t have to worry about these details, but to properly understand the high availability concepts introduced with Exchange Server 2010, you need to know a thing or two about the technologies involved. In one of the next articles I want to discuss Datacenter Activation Coordination mode, which relies on the Active Manager.

I received a question on if it was possible to decommission a DAG, so that the Exchange 2010 servers would become stand-alone Exchange servers and the databases remain available on one server, freeing up other mailbox servers. I assume the customer has valid reasons for wanting to do so, like downsizing without requirements justifying the DAG. To answer that question: of course that is possible. Now, while many blogs are happy to tell you how to create a DAG there aren’t many on how to dismantle one, so here goes.
